As Interior Minister Miguel Rodriguez Torres and the Venezuelan Violence Observatory battle it out over the 2013 murder figures, most Venezuelans shrug their shoulders and believe whom they want to believe. Government supporters instinctively trust the minister, while the opposition takes it for granted that the Observatory’s much higher estimate must be right.

Oddly, in this hyperpolarized environment, the quality of that Venezuelan Violence Observatory (OVV) number has not gotten much scrutiny — and the OVV figure is not what it seems. It is not a body count based on leaked government data, nor is it an estimate constructed from a proprietary survey. It is a forecast based on past trends — a slightly more sophisticated version of the XKCD method.

This article first appeared on the Caracas Chronicles blog. Read the original here.

In fact, the methodology section of OVV’s latest press release raises more questions than it answers. The forecasting techniques they mention require data both on past forecasts — i.e., 2010’s guess for 2011 — and on actual realized past values — i.e., the actual number of violent deaths in 2011. But since, by OVV’s own account, they don’t have access to reliable counts for at least the past five years, it is not clear what they’re using as model inputs. There is an oblique reference to “partial data from diverse regional and national sources,” but it’s not clear what those data are or how they are used.
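To see why both inputs matter, consider simple exponential smoothing, one common technique with this property. The press release does not say which technique OVV actually uses, so treat this as an illustrative assumption rather than a description of their model. Each new forecast blends last year's forecast with last year's realized value; the function name, the smoothing weight `alpha`, and the numbers below are all hypothetical.

```python
def smoothed_forecast(prev_forecast, prev_actual, alpha=0.5):
    """Next-period forecast under simple exponential smoothing.

    prev_forecast: last period's forecast (e.g., 2010's guess for 2011)
    prev_actual:   last period's realized value (e.g., the 2011 count)
    alpha:         hypothetical smoothing weight in [0, 1]
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    # Weighted blend: alpha on what actually happened, the rest on the old guess.
    return alpha * prev_actual + (1.0 - alpha) * prev_forecast


# Made-up rates per 100,000, for illustration only (not OVV data).
forecast_next = smoothed_forecast(prev_forecast=60.0, prev_actual=70.0, alpha=0.5)
print(forecast_next)
```

Without a reliable value for `prev_actual`, the update has nothing to correct against: set `alpha` to zero and the forecast simply repeats the earlier guess.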

I reached out to Roberto Briceño-León for clarification [disclosure: Briceño-León is a friend and I had him read a draft of this post before publishing], hoping to be told, “No, chama, you misunderstood; our number is based on data from such-and-such source.” Nope. Instead, he said that my methodological criticisms were valid, adding, “Our methodology isn’t perfect, and it doesn’t meet all the standards, but there is no other way to get information in this darkness; this is the little light we have.” In other words: without reliable government statistics, what do you want from us except a simple forecast?

Well, let’s see: for starters, I’d like OVV to publish rather than hide the accuracy (or lack thereof) of their estimate. For instance, they tell us that “we can state the range of our affirmations with a 95 percent confidence level,” but this makes little sense, because they are not publishing a range; they are publishing a single number. A point estimate without a confidence interval is close to meaningless. Are they 95 percent sure that the interval 75/100,000 to 83/100,000 contains the true homicide rate? Or are they 95 percent sure that it is between 20/100,000 and 138/100,000? For all we know, the government’s figure (39/100,000) is within OVV’s range.
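A quick sketch makes the stakes concrete. Both ranges in the preceding paragraph are centered on 79/100,000, so the only thing separating them is the uncertainty around that midpoint. The code below assumes normally distributed errors and uses two hypothetical standard errors, picked only because they roughly reproduce those two ranges; OVV has published no such figures.

```python
from statistics import NormalDist


def normal_interval(point, std_error, level=0.95):
    """Symmetric interval around a point estimate, assuming normal errors."""
    z = NormalDist().inv_cdf(0.5 + level / 2.0)  # about 1.96 for 95 percent
    return point - z * std_error, point + z * std_error


def covers(interval, value):
    """True if value lies inside the closed interval."""
    low, high = interval
    return low <= value <= high


POINT = 79.0       # midpoint of both ranges quoted in the text, per 100,000
GOVERNMENT = 39.0  # the government's figure, per 100,000

# Hypothetical standard errors (assumptions, not published by OVV).
for std_error in (2.04, 30.1):
    interval = normal_interval(POINT, std_error)
    print(std_error, [round(x) for x in interval], covers(interval, GOVERNMENT))
```

With the small standard error the government's figure falls far outside the interval; with the large one it falls inside. The same point estimate supports opposite conclusions, which is why the range has to be published alongside it.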

Then they compound the problem by reporting what they have already told us is an estimate with extreme numerical precision — 24,763 deaths, rather than 24,762 or 24,764 — lending the figure an artificial air of exactitude. Not amusing.

And there is a further methodological muddle: the inconsistent use of “homicides” and “violent deaths,” which look like synonyms but definitely are not. (For instance, if you’re killed resisting arrest, that doesn’t count as a murder for Rodriguez Torres’s purposes.) OVV used to report homicides, but their 2013 press release actually refers to violent deaths. So we end up with a case of apples and oranges: OVV’s 2013 (and 2012) estimates are not directly comparable to OVV estimates from previous years… or to Rodriguez Torres’s number.

These flubs are troubling because OVV insistently bills itself as an academic institution, which gives their estimates a scientific aura. That press release refers to “investigators from the seven national universities that make up the Venezuelan Violence Observatory,” and Briceño-León is a respected and prolific professional sociologist. But claims to scientific status depend crucially on publishing detailed descriptions of methodology, allowing other researchers to scrutinize and replicate results. Why does OVV not do this?

And, if OVV is wrong, what’s the real story?

Surely, we cannot just trust the minister’s number: with even less information about how Rodriguez Torres’s people arrived at their figure (insert obligatory condemnation of CICPC censorship), that would be foolhardy. So is there any reason — other than Rodriguez Torres’s say-so — to believe that the worst of the violence nightmare might actually be behind us?

In fact, I see three reasons to suspect that the answer might be yes … and none of them have to do with Plan Patria Segura.

First, little noted in the Guerra de Cifras (War of Statistics), there actually is a third source of info on all this: the Ministry of Health (MPPS), which compiles and publishes birth and death records. And, perhaps surprisingly, the MPPS data show the violent death rate falling in 2009, 2010, and 2011 (the last available year; 2012 and 2013 haven’t been posted yet).

Of course, MPPS officials might have manipulated the data for political reasons, but if they did, they did so carefully, without leaving obvious tracks in the underlying micro data (which I have worked with for research purposes). Naturally, there is a lot of room for error, and for potential bias, in the way MPPS codes the death certificates that give rise to this data. Still, much of the administrative process is separate from the process that produces the CICPC data, which makes it better than nothing as a third source. Moreover, there is no obvious motive to fudge it, since this data is so far off the political radar screen.

But let’s assume you just refuse to take any number the government publishes seriously on principle. Then are we stuck with the XKCD method?

Not at all. Rather than forecast the homicide rate based exclusively on the past trend — which, for OVV, is itself a series of rough guesses — you might try estimating the homicide rate based on hypothesized covariates. Roberto Briceño-León, for example, has made quite clear that he views impunity as a key driver of the violent crime trend; he might therefore try to look at data on policing or apprehension rates.

Or take Quico’s hypothesis, which is that the volume of cocaine trafficked through Venezuela drives the homicide rate — not, as in Mexico, because cartels fight for the international trafficking business (Venezuela has only one big cartel), but because some of the cocaine makes its way to the domestic market, where street gangs fight for the local retail trade. If you believe this story, you might note that trafficking has declined (pdf) as demand in the United States falls, and that could account for lower violence in Venezuela.

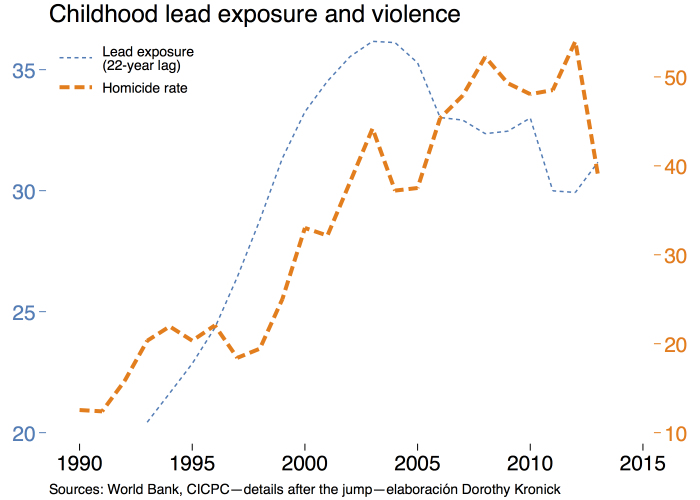

Other research links homicide rates to childhood lead exposure, pointing to evidence that the decline in leaded gasoline use in the United States in the 1970s produced the decline in US homicide rates in the 1990s.

This is yet another reason that Venezuela’s homicide rate might be declining: the childhood lead exposure of Venezuela’s malandreable-age** cohort rose sharply through the 1990s (as crime increased) and then recently began to drop.

My point isn’t that either lead poisoning or cocaine trafficking is definitely responsible for the violence wave; these assertions would require serious quantitative evidence. My point is that there are lots of data researchers consider when trying to explain trends in homicide rates. Longstanding trends can turn, sometimes for reasons that have nothing to do with law enforcement.

But no new trends could show up in an OVV estimate that limits itself to extrapolating data from years when murder rates were rising. Actually, it’s not even clear why they needed to wait until December to publish their 2013 number: if all the data that went into their 2013 estimate was data they already had a year ago, why not publish the 2013 murder tally back then? For that matter, why not tell us right away how many homicides there will have been in 2014?

So now that I’ve tugged OVV’s ear for methodological opacity, I’d better tell you where I got all my data:

- Official/CICPC/MPPRIJ figures were published here by Ana Maria San Juan, for 1990–2011.

- For 2012 and 2013, I am taking the official homicide rate from press reports on official announcements (2012, 2013).

- MPPS publishes annual reports here. For 1999–2010, I am calculating the violent death rate based on the underlying data, but you can replicate the totals using just the data in the PDFs (which is what I do for 2011, since I don’t have that micro data yet) by adding the following causes of death (ICD-10 codes): X85-Y09, Y20, Y21, Y22, Y23, Y24, Y28, Y29, Y30, W32, W34, Y35, Y36.

- For population, I am using some INE data that I think has been removed from their website; happy to provide it by email.

- For cocaine seizures, 2007–2011 are here; 1980–2006 here.

- Childhood lead exposure is a back-of-the-envelope calculation: the product of road gasoline sold per capita (from WDI), percent of population that is urban (also WDI), and percent of road gasoline that’s unleaded (see p. 76 here).
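For anyone who wants to replicate the MPPS violent death rate from the list above, the tally can be sketched roughly as follows. This is a minimal illustration, not the code behind the figures in this post: the function names, the `{code: count}` input format, and the sample numbers are all hypothetical, and it assumes each cause of death is given as a three-character ICD-10 category, optionally followed by a subcategory.

```python
# Causes of death counted as violent, taken from the MPPS item above.
VIOLENT_RANGE = (("X", 85), ("Y", 9))  # X85-Y09
VIOLENT_SINGLES = {"Y20", "Y21", "Y22", "Y23", "Y24", "Y28", "Y29", "Y30",
                   "W32", "W34", "Y35", "Y36"}


def is_violent(code):
    """True if an ICD-10 code falls in the list of causes given above."""
    category = code.strip().upper()[:3]  # drop any subcategory, e.g. "X95.4"
    if category in VIOLENT_SINGLES:
        return True
    if len(category) < 3 or not category[1:].isdigit():
        return False
    # Compare (letter, number) pairs so the range can cross from X into Y.
    key = (category[0], int(category[1:]))
    return VIOLENT_RANGE[0] <= key <= VIOLENT_RANGE[1]


def rate_per_100k(deaths_by_code, population):
    """Violent deaths per 100,000 residents from a {code: count} mapping."""
    violent = sum(n for code, n in deaths_by_code.items() if is_violent(code))
    return 100_000 * violent / population


# Made-up counts and population, for illustration only (not MPPS or INE data).
sample = {"X95": 30, "I21": 100, "Y35": 10}
print(rate_per_100k(sample, population=1_000_000))
```

The population denominator would come from the INE series mentioned above; any other population source will shift the rate accordingly.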

*Dorothy Kronick is a PhD candidate researching violence in Venezuela.

** “Malandro” is a Venezuelan term for a “thug,” typically a male under 25 years old.
